Published 21:08 IST, February 8th 2024

EU’s AI ambitions may fail on two fronts

EU member states signed off on the so-called AI Act last week.

- Republic Business

Talk to the machine. The European Union’s artificial intelligence ambitions may fall short. Brussels wants to enact vanguard regulation that makes the new technology safer and more competitive. But overly detailed legislation makes it more likely the EU, and emerging local champions, get left behind.

EU member states signed off on the so-called AI Act last week and sent it to the European Parliament for approval. The ambitious regulations aim to rein in emerging technology so advanced it could pose a systemic risk, while protecting privacy and explicitly prohibiting some types of profiling. U.S. President Joe Biden has taken a different tack, issuing an executive order that includes job-boosting measures as well as plans for consumer protections and foreign technology safeguards.

In trying to rein in AI, the EU is following its pattern of ambitious regulations like the Digital Markets Act, which seeks to force U.S. tech giants like Apple and Alphabet to open up their walled gardens, and the General Data Protection Regulation, which many consumers know from the pop-ups seeking permission to deposit cookies in their browsers. However, big companies have a ready incentive to challenge EU rules in court or find external workarounds, particularly since technologies are evolving fast.

It doesn’t help that the EU is internally divided on how to approach the issues. Germany, for example, has long-standing concerns about privacy, yet joined France in lobbying for more leeway for mid-sized AI startups such as Paris-based Mistral AI and Heidelberg-based Aleph Alpha. This tension plays out in how the new rules handle systemic risk. General-purpose AI models, which can apply abstract thinking and adapt to new situations, will face the toughest scrutiny if the computing power used to train them exceeds a certain threshold. This currently only applies to U.S.-based OpenAI’s GPT-4 chatbot, not its EU rivals.

It’s far from clear that such a technological cutoff is an effective way of managing AI risks, let alone whether the EU can keep applying tighter rules to only the most advanced players. Another sticking point is when and how open-source models should be treated differently from private models when used by profit-driven companies. The draft law currently exempts open-source technology from some, but not all, of the requirements.

The EU is hoping that its new rules will trigger a global rush toward stronger regulation. But they may just reinforce the U.S. lead in technology. Giants like Microsoft and Google owner Alphabet have deep pockets to finance investment in AI, access to vast amounts of data, and huge potential customer bases for new applications. Furthermore, the Brussels benchmark creates opportunities for others to adopt a lighter touch. Last year Microsoft announced big AI investments in the UK, which could design rules to be more friendly to big tech firms.

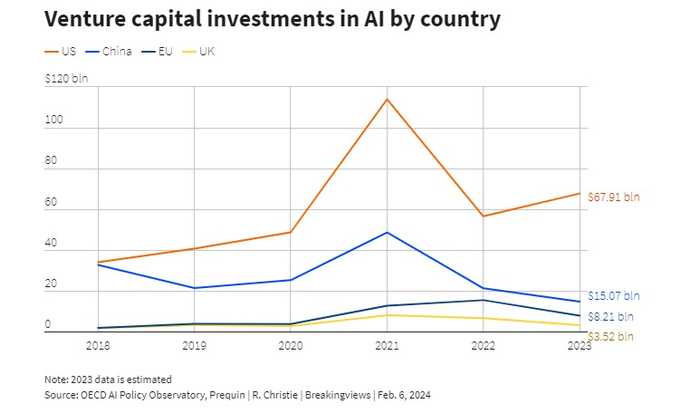

In 2023, venture capital firms invested an estimated $68 billion in AI in the United States, compared with $15 billion for China and just $8 billion for the EU, according to the OECD’s AI Policy Observatory. If the EU clamps down too hard, too early on the emerging sector, innovation will follow the money elsewhere. Then Brussels will be less of a rule maker than a technology taker.

Updated 21:08 IST, February 8th 2024